I am going to give you a very honest disclaimer from the outset of this post: I am not completely on board with parental control apps.

If you’re looking for a post that supports the use of parental control apps, you probably won’t find what you’re looking for here. Equally, you won’t find a post that leaves you with a fear-mongering sense of overwhelm that parental control apps are the spawn of Satan.

That opening might be a surprise to many of you.

To anyone who has read my blog before, who follows me on social media, or who knows my real identity, you’ll know that I was groomed as a teenager. And a lot of that grooming happened online.

You’ll also know that I’m far from being ‘anti-tech.’

When I was groomed, it was the days before Instagram, Snapchat, and TikTok, but we did have Facebook (albeit in a version that most people wouldn’t recognise in its current form), MySpace, MSN Messenger, chat rooms and text messaging. And even as someone who was groomed using some of the platforms and methods I’ve just mentioned, I still don’t think that parental control apps are the solution to child grooming and they are something that we do not use with our children.

But if you bear with me, I think you’ll start to see why these ‘amazing’ apps are not all that they’re cracked up to be.

Why?

Don’t I want them to avoid what I experienced?

Of course I do.

And I also get that in an age where primary-aged children are able to access sites such as PornHub and children are being groomed in record time online, there’s a need to protect children and teens in this online world.

But these apps are (in my opinion) not the way to prevent this harm from occurring.

Let me explain why…

Understanding Parental Control Apps

Parental control apps are designed to monitor and manage children’s digital activities. Popular examples, which we will look at more closely later in this post, include:

- Qustodio

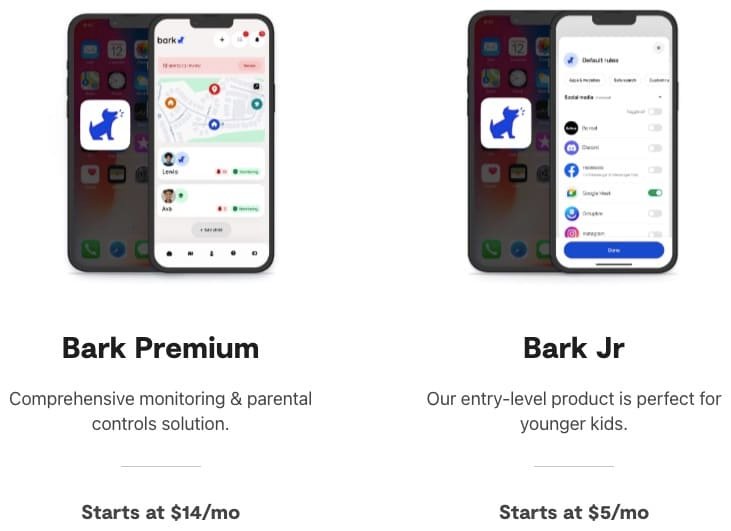

- Bark

- Net Nanny

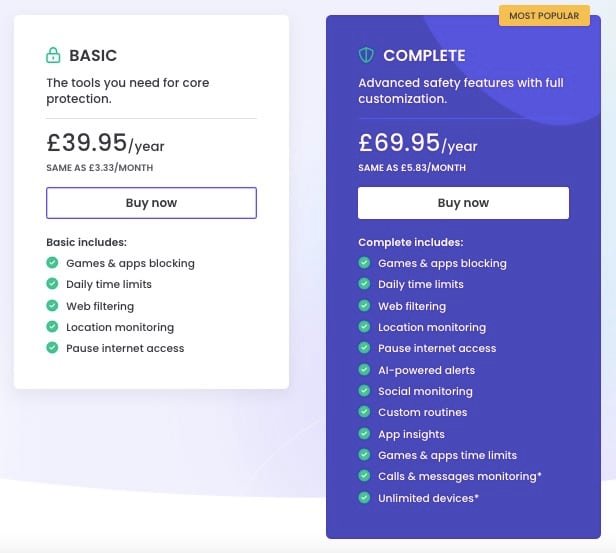

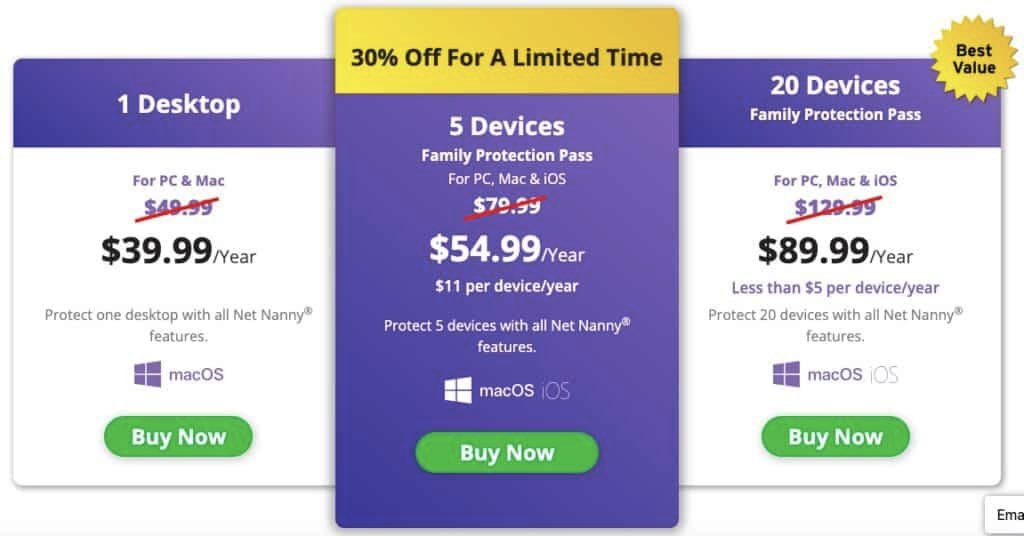

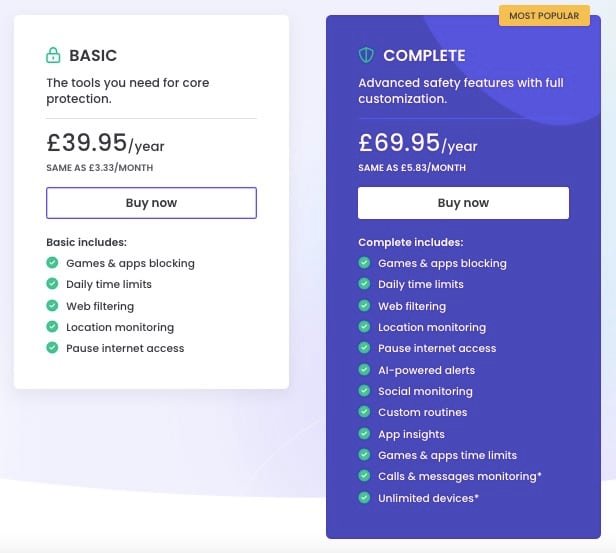

Each works in its own way, but overall, they have functions to enable parents to filter content and manage a child’s screen time on a device or devices. Pricing for these apps (as of 2025) starts from less than £5 per month.

Qustodio Pricing

Bark Pricing

Net Nanny Pricing

Popular apps like Qustodio, Bark, and Net Nanny offer features such as content filtering, screen time management, activity monitoring, and location tracking.

For instance, Qustodio provides comprehensive monitoring, including web filtering and screen time controls. Bark utilizes machine learning to detect potential issues like cyberbullying or suicidal ideation in children’s online interactions. Net Nanny offers dynamic internet filtering and real-time alerts for concerning activities.

While these features aim to protect children, it’s essential to scrutinize the broader implications of their use.

The Billion-Dollar Industry Behind Parental Control Apps

If I had to choose one major point out of the entire post as to why I don’t like these apps, it’s this: Rather than targeting and dealing with the issue of abusers, an entire industry has been created and is generating billions of dollars pitching a ‘solution.’

Surely if these companies were truly invested in child protection, they would be plugging their investments into catching sex offenders and petitioning for legislative changes to raise conviction rates, rather than preying on the pockets of parents who are desperate to keep their children safe.

As a company, you cannot seriously believe that you are doing good when you are generating millions of dollars off of scared parents who are desperate to keep their children safe, whilst knowing that the conviction rate for sex offenders is at a disturbing low. How on earth did we get to a point where children’s safety was so commercialised? Would I go as far as saying that we’ve created a lucrative industry because it’s easier than sorting a societal problem? Yes, yes I would.

Statistically, sex offenders (be they groomers, rapists, child molesters, etc.) walk around in society and we’d never know. I’ve been very open in the past about how the two people who assaulted/abused me will never be investigated or receive prison time for what they did. There are statistics that tell us that we all know a sex offender without realising it.

Yet these apps continue to peddle their wares as if they are the solution to a problem that some of them are funding, or they are happy to take parents’ money to ‘solve’ an issue without actually tackling the issue in hand.

Why?

Because lobbying Parliament for legislation changes and campaigning for tougher sentences on sex offenders will not increase the size of your bank account.

It’s so unethical to make money out of such a serious issue.

And I’ll give you this information for free: a lot of the companies investing in these parental control apps also fund the platforms that cause these problems in the first place.

Selling Features That You Can Get for Free

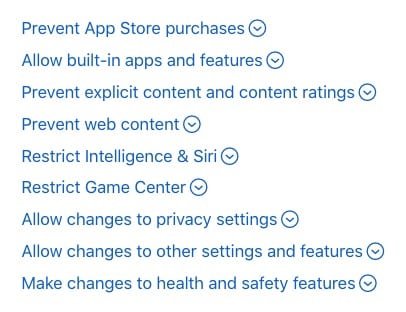

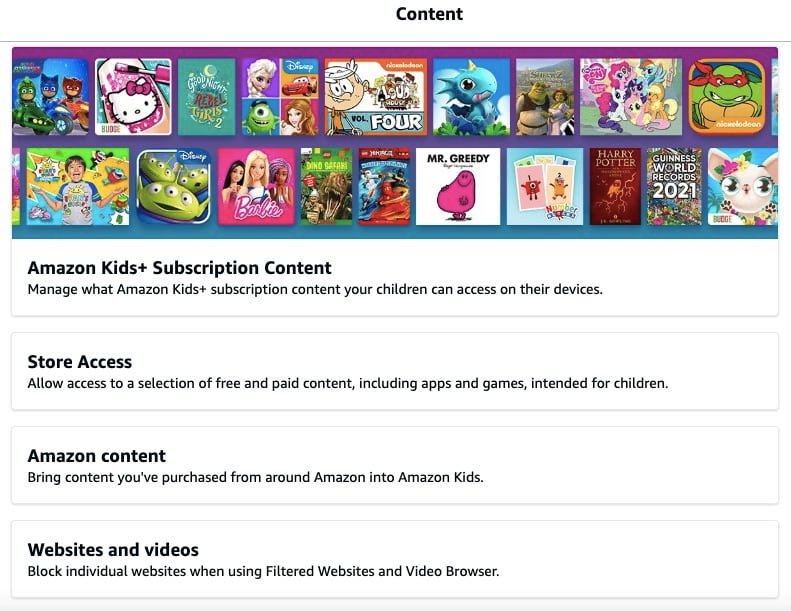

Qustodio has two pricing plans, starting from £39.95 per year. I’m happy to be proven wrong, but from what I can see on the table below, most devices can do these things without paying for an app.

For example, in Amazon Kids (Kindle), you can block games and apps, set daily time limits, filter websites, and pause internet access. Apple devices can also do these things, and if your device doesn’t, most internet routers that are already in someone’s house can also do these things.

I’m therefore unsure as to why anyone would need the Basic plan, and it irks me that a company is profiting from someone’s naivety about what their devices can already do.

AI Powered Alerts

Here’s the next problem I have: ‘AI-powered alerts.’

Many parental control apps state that they use AI to generate alerts to parents about their child’s online activity.

My question is this: How is AI creating these alerts? What information is the app reading about your child’s online activity to know what to alert the parent to? What information about your child are you inadvertently feeding the AI machine in the name of internet safety?

We already know that there are cases where children have been sexually abused by AI; surely, this use of AI is just continuing to train AI?

Incidentally, I couldn’t find anything in Qustodio’s Privacy Policy or Bark’s Privacy Policy that told me how this use of AI actually worked and how my child’s information about their online activity was being stored and processed for the purposes of these AI alerts. I looked at other parts of their websites, but was just met with marketing as to how beneficial these AI alerts were and presented with a button to start my subscription to their services.

Calls and Messages Monitoring

The next issue that I have with these apps is around the calls and messages monitoring function. This is offered by Bark and Qustodio.

Yes, we want to keep our children safe, but I have an issue with this for 3 reasons:

Some Secrets Are Ok to Keep

What if the child just wants to do something harmless such as buying a present for a parent’s birthday?

Some secrets are safe and are ok to be kept. This function doesn’t necessarily allow for ‘good’ secrets to be kept.

It’s Not Just Strangers Who Abuse Online

We assume that it’s strangers who will groom and abuse children online.

The statistics tell us differently.

Call and messaging monitoring could mean abuse is missed because parents don’t see the need to investigate calls and messages between relatives and family friends whom they trust.

Worsening Controlling and Coercive Behaviours

Monitoring someone’s calls and messages means that an abuser could intensify their control over a child.

Think about it; if a parent is abusing a child, monitoring their calls and messages means that they could prevent a child from telling someone about that abuse. Equally, they could see through other features if a child has been researching information that could help them, for example from Childline, Rape Crisis, NSPCC, or the police.

Note how in the first sentence of this point I said ‘someone’ rather than ‘child.’

This was intentional.

Although these apps are designed to monitor children’s online activity, there’s nothing stopping an abuser using these types of apps to monitor the calls and texts of a partner, or anyone else they are abusing.

Can Children Get Around Parental Control Apps?

Back in the 2000s, we could work around the school internet firewalls to access the websites that the teachers didn’t want us to.

Sure, a lot of this was MyScene and Neopets (if you have no idea what I’m talking about then you clearly weren’t a teen in 2002-2004), but the point is that we knew how to do it. We also figured out how to get around the internet blocks on certain keywords when we were doing Biology projects (use your imagination there).

And whilst I get that internet security has moved on a HUGE amount since we were all watching Lizzie McGuire and Sabrina the Teenage Witch after school, the point is that children and teens are not stupid. If we could work around these security measures then, we must not fool ourselves into thinking that children haven’t also evolved in knowing how to work around parental controls.

You only need to take to Reddit to see thousands of posts about how to work around parental controls in general, and also on specific apps.

And before you think that you’ve blocked Reddit, or keywords about blocking certain searches, I’ll tell you that it likely doesn’t matter.

You can’t block searches carried out at other people’s houses, or on friends’ devices. If a child or teenager

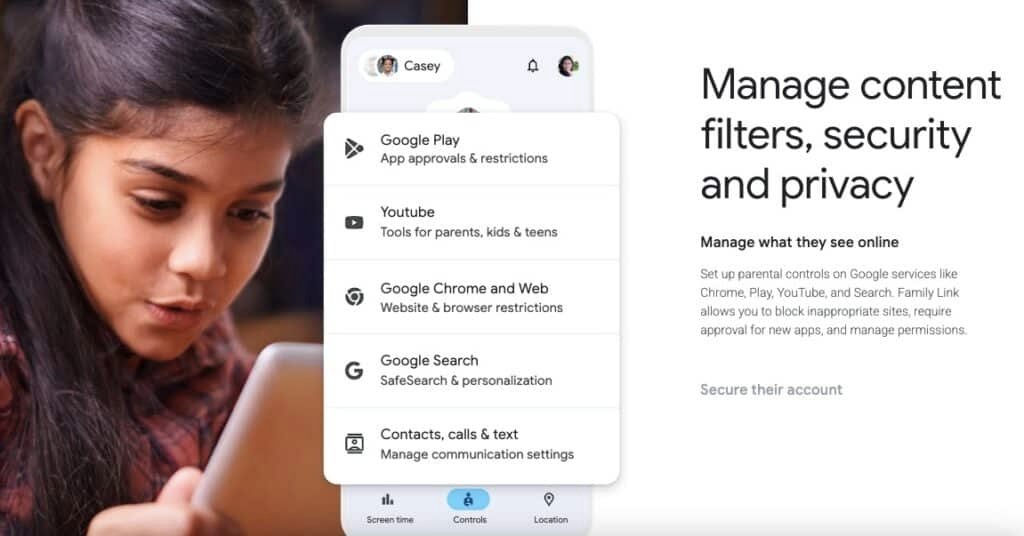

What About Free Parental Control Apps?

So far in this post, I’ve only focused on paid-for parental control apps. So what about the free versions? Surely I don’t have a problem with them because they’re not profiting off of this problem?

Sorry, but I’ve got a problem with them too.

Apps such as Google’s Family Link are more limited than apps such as Qustodio, Bark and Net Nanny; for example, you can’t monitor your children’s text messages and calls. However, Google, which created this app, also invested heavily in Character.AI. And if you’ve not heard of this, it’s an AI platform that encouraged a teenager to attempt to take his life. Tragically, his attempt was successful and he died by suicide. Sewell Setzer III was 14 years old.

How can a company that is part of the problem also be part of the solution?

Sidenote: I’ve been told anecdotally that Google invests money into the NSPCC as well, but I’ve not been able to find evidence for this online.

And yes, this post is more geared towards online abuse in terms of sexual abuse; there are documented cases of AI sexually abusing children by engaging them in sexually explicit conversations. Character.AI is not part of these documented cases as far as I could find.

Can We Protect Our Children Online Without Parental Control Apps?

This is a tough question that I don’t think anyone has the answer to.

However, what I will say is this:

The person who groomed me for the purpose of sexually abusing me did so on several different platforms. This is important because we know that perpetrators commonly have children interact with them on more than one platform to ensure access. Equally, none of the parental control apps that I’ve looked at have mentioned monitoring emails. Whilst emails aren’t THE method of communication that they once were, they are still a way of abusers contacting children and I haven’t seen one parental control app acknowledge this.

The person who groomed me was also in a position of authority, and due to my age, I’m not convinced that us contacting each other would have raised suspicions.

I cannot stress enough that people who groom and abuse children are more likely to be labelled as trustworthy, kind, generous, going above and beyond, etc because this labelling hides them from what they are capable of. We mustn’t assume that all online child abusers are sitting in a basement with the lights off.

I do think, though, that open communication needs to form part of a solid parenting strategy when it comes to online safety.

Children need to know that they can discuss their online experiences with a trusted adult, when and if something goes wrong. We’ve always stressed to our children that if they don’t feel able to tell us about something that’s happened online, then there are other people. I often then ask them who they could go to, rather than telling them. It’s interesting the names of adults that come up, but it’s good to know who they trust in their lives.

Equally, children need to understand that they won’t be punished for ‘making a mistake’ or ‘doing the wrong thing’ online. We think about teens in particular reaching out for help if they’re drunk or feel unsafe at a party. We need to encourage this communication for their online use as well.

And yes, communication in itself isn’t going to solve the issue of protecting children and teens from online grooming and abuse.

But neither will parental control apps.

It’s about figuring out what works best for your family and your children, and being open to the fact that there isn’t one solution, nor is there a right solution, to online safety for children. We need to empower children to know what isn’t acceptable and to be able to speak up. We cannot and must not rely on giving money to app companies whose goal, at the end of the day, is to make a profit, and expect this to be enough to protect our children online.

Final Thoughts

Hopefully, you haven’t got to the end of this post thinking that I’m completely ‘anti-tech.’

I love technology and am well known in both my professional and private life for integrating tech where I can. It’s the 21st century and I don’t think that anyone can be properly ‘anti-tech’ at this point in time.

However, I think it’s important to remember that tech does not and cannot provide the solution to every parenting and safeguarding issue, especially when a lot of these companies might have investors that are also investing in companies that create the problems that parental control companies are seeking to resolve. Additionally, I think there’s a huge ethical dilemma around monitoring your child’s activity, and around companies profiting from sex offenders rather than tackling the issues that these people create in the first place. And remember, this is coming from someone who was groomed online for the purpose of sexual abuse.

Parenting is ridiculously tough to navigate and nobody has all of the answers, least of all the right ones.

However, I firmly believe that pouring money into these apps is not the way to go and we must find more ethical ways to protect young people online.

And that starts with the root cause of the issue: sex offenders who are hiding in plain sight. Judicial reforms. Tougher sentencing. Trauma-informed practice. We have the solutions, but to be able to put them in place, we have to acknowledge that we have a problem with society first.

A final note on this post: Please remember that everything I have written here is shared based on my own research and interpretation of the articles and reports I could find. It’s important that you do your own research to help you make the choices that are right for your children and your family.